December 20, 2018 – The state of quantum computing is about to be shaken up by Google, according to an editorial that appeared in Nature two days ago. The piece states that “one of the company’s [Google] labs, in Santa Barbara, California, has promised that its state-of-the-art quantum chip will be the first to perform calculations beyond even the best existing supercomputers.”

Raising expectations that quantum computing has finally arrived to replace the high-speed computing systems used today may be premature. Software on classical computing platforms continues to evolve in ways that will substantially extend the capabilities and capacity of current technology, with artificial intelligence (AI) and machine learning leading the way. In fact, researchers believe they can emulate the way quantum computers work on classical computing systems, which would make the rush to the former and the displacement of the latter unnecessary.

The editorial concludes that quantum computers remain a “not-yet-existent technology in search of problems to solve.” Meanwhile, classical computers with AI are going gung-ho at tackling the real problems humanity faces.

In fact, there are those who would argue that quantum computing remains beyond our current technological capability. Professor Mikhail (Michel) Dyakonov of the Laboratoire Charles Coulomb, Université de Montpellier, writes in an article entitled “The Case Against Quantum Computing,” which appeared in the November 15, 2018 edition of IEEE Spectrum:

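“The number of qubits [quantum bits] needed for a useful quantum computer, one that could compete with your laptop in solving certain kinds of interesting problems, is between 1,000 and 100,000… That’s a very big number indeed. How big? It is much, much greater than the number of subatomic particles in the observable universe.”

The “very big number” here is not the qubit count itself. In the passage the ellipsis skips over, Dyakonov is referring to the number of continuous parameters needed to describe the state of such a machine at any moment: at least 2 to the power of 1,000, or roughly 10 to the power of 300.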

Error correction in a quantum computer is so complex that engineers would quickly lose interest in the technology, states Dyakonov. He goes on to pour cold water on the claims by quantum computing theorists that errors can be handled using the threshold theorem. The theorem states that if the error per qubit per quantum gate is kept below a certain threshold, arbitrarily long computations become possible through quantum error correction schemes, which encode each logical qubit in many physical qubits.

But Dyakonov points out that you need many physical qubits to create a logical qubit. How many? “No one really knows, but estimates typically range from about 1,000 to 100,000.” Even at the low end, 1,000 logical qubits built from 1,000 physical qubits each means a quantum computer containing a million qubits.

A reality check: Google’s quantum processor, Bristlecone, is a 72-qubit gate-based chip. That’s up from its previous 9-qubit system, and considerably larger than the runner-up, IBM, with its 50-qubit processor.
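To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It assumes the simplest possible model, which is my own reading of the figures quoted above rather than anything Dyakonov spells out as a formula: total physical qubits equal logical qubits multiplied by the physical qubits needed per logical qubit. The function and variable names are made up for illustration.

```python
# Back-of-the-envelope estimate using the figures quoted in this post.
# Assumption: total physical qubits = logical qubits x physical qubits per logical qubit.

LOGICAL_QUBITS = (1_000, 100_000)        # range quoted for a useful machine
PHYSICAL_PER_LOGICAL = (1_000, 100_000)  # range quoted for error-correction overhead


def physical_qubits_needed(logical, overhead):
    """Multiply the logical qubit count by the per-logical-qubit overhead."""
    return logical * overhead


# Low end: smallest useful machine with the smallest overhead.
low = physical_qubits_needed(LOGICAL_QUBITS[0], PHYSICAL_PER_LOGICAL[0])
# High end: largest machine with the largest overhead.
high = physical_qubits_needed(LOGICAL_QUBITS[1], PHYSICAL_PER_LOGICAL[1])

# Gate-based processors mentioned above.
devices = {"Google Bristlecone": 72, "IBM": 50}


def shortfall_factor(device_qubits, required=low):
    """How many times larger the requirement is than the device."""
    return required / device_qubits


if __name__ == "__main__":
    print(f"Low estimate:  {low:,} physical qubits")
    print(f"High estimate: {high:,} physical qubits")
    for name, qubits in devices.items():
        factor = shortfall_factor(qubits)
        print(f"{name}: {qubits} qubits, about {factor:,.0f}x short of the low estimate")
```

Even against the most optimistic end of the range, today's gate-based chips come up short by a factor in the tens of thousands under this simple model.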

And then there is D-Wave, the Burnaby, British Columbia-based quantum computing company, which claims to have a 2,048-qubit device and offers an open-source library of software on GitHub for beginners. Already on the market, the D-Wave 2000Q is claimed by the company to be the first commercial quantum computing system combined with software to do real work. D-Wave systems are in use at NASA, Volkswagen, Los Alamos National Laboratory, Lockheed Martin, USC, and Google itself, although the latter isn’t mentioning D-Wave in making its claim to 2019 as the year when quantum computing goes mainstream. And when you consider that D-Wave systems, at 2,048 qubits, are only at Dyakonov’s lowest threshold for useful computing that is not subject to error, I think one could be left with the impression that Google’s statement is a bit of an overreach.

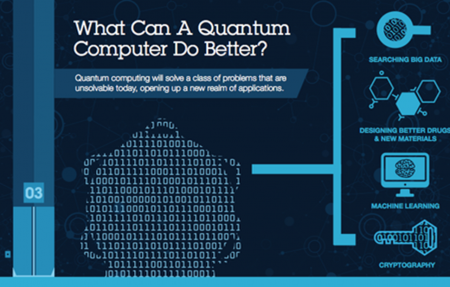

Today, what kind of practical problems are quantum computers solving? Here are a few examples, and I might add there aren’t many:

- finding the prime factors of numbers for code making and code breaking, and searching big volumes of data for esoteric answers.

- computing the energy states of molecules.

- studying energy generation in photosynthesis to try and simulate the molecular interactions.

- understanding reaction mechanisms in complex chemical systems, for example, how nitrogenase fixes nitrogen in plants.

With the exception of the first one, it’s pretty clear that current quantum computing applications focus largely on the obscure rather than mainstream problem solving. So don’t expect quantum versions of Microsoft Excel or Word on that first quantum computing device that Google states may become available next year.