February 9, 2015 – If Google, Uber and others are going to make self-driving vehicles a reality everywhere, then they have to get past one very large hurdle. Within the last month, Google admitted that its technology cannot handle snow. It appears the radar, computer vision and sensors can be confused or even blinded when the white stuff starts falling. Heavy rain can have a similarly deleterious impact. There is one other small snag in Google’s autonomous vehicle platform: it has trouble detecting potholes in the road. Can it adjust to the bone-jarring impact of driving into one? What will that do to the on-board sensors?

These challenges add to other known deficiencies in current autonomous vehicle technology, which include:

- an inability to drive in areas that have not been digitally mapped.

- an inability to deal with sudden, temporary changes to road conditions, such as signage for temporary closures or a new stop sign or traffic light that is not in the vehicle’s database.

- temporary blindness when driving directly into the Sun, which could cause the vehicle to miss a traffic light or sign in the same field of view.

- an inability to distinguish between objects spotted on the road surface: is it a rock or a piece of crumpled paper?

- an inability to tell the difference between a pedestrian about to cross the street and someone standing at the side of the road waving. Both appear as motion, but only one could actually lead to a collision and a fatality.

Overcoming these weaknesses will require improved sensors, better computer vision, exhaustive and continual digital mapping of all existing roads, faster uplinks and downlinks, and better software.

Today, autonomous vehicles need a prescribed route on which to drive. All current tests and pilot projects are designed to use them this way. All existing autonomous vehicle projects are also limited to traffic moving in only one direction. No autonomous vehicle has yet had to deal with a fast flow of oncoming traffic while making a quick left turn (or, for drivers in the United Kingdom, a quick right one).

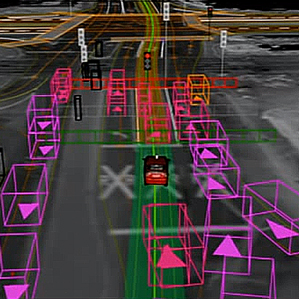

So although futurists see autonomous vehicles affecting the jobs of taxi and bus drivers, we are still a long way from that reality. For the moment, the self-driving car is still a product in development, as the image above attests. Here we see how Google’s self-driving car sees moving objects in real time. But it can only see them when they are overlaid on a pre-made map on which stationary objects like traffic lights and stop signs are plotted. Change any one of these objects and the autonomous vehicle could be driving blind.