July 6, 2018 – When I drove my car this morning to pick up an order, I had to be on the lookout for pedestrians crossing in the middle of the street, a squirrel doing what squirrels do when attempting to get across a road, and another driver who didn’t signal but cut into my lane. I made several right turns at red lights (allowed in Toronto), which meant looking for pedestrians crossing at the intersection while also studying the traffic coming towards me. And I dealt with multiple traffic signs, posted speed changes, and the like. For me, this was a perfectly normal 15-minute commute. But for an autonomous vehicle to do what I did requires understanding the conventions and rules while recognizing that the process of driving often presents unconventional occurrences.

Baking the “rules of the road” into the brain of an artificial intelligence is not easy. That’s because so much in driving is not just about hard-and-fast rules. Some we break to avoid hitting a vehicle. Some we break because common sense dictates that we should. Human drivers, as a result, cannot be relied on to behave predictably.

In an article that appeared in The Globe and Mail last February 5th, Jason Tchir asks the question “will self-driving cars understand the finer points of traffic etiquette?” He talks about pedestrians stepping off the curb into the street and making eye contact with a driver to get a nod signalling that it is okay to cross. It is hard to imagine that same scenario playing out with an autonomous vehicle (AV). How would a driverless car engage that pedestrian? The camera technology would see the person, but a non-verbal cue like a nod would not be possible. Toyota, Nissan, and Ford have wrestled with this issue in their testing of AVs.

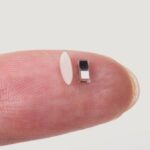

The Toyota and Nissan solution at present is to have their cars display text on the windshield informing the pedestrian that it is okay to cross. The Nissan concept with text display can be seen above with the words “after you” projected on the windshield. But think about this as a solution. It means that in every market where Nissan sells its vehicles, it has to have messages that display in the local language. But what if there are many local languages? Canada, for example, has two official languages, French and English. In places like India with many official languages, how can an AV know which language to present to a crossing pedestrian?

The Ford solution is to use a bright light displayed at the top of the windshield. The light could serve two purposes. First, it could let the pedestrian know that a Ford car with this type of light is an AV. That doesn’t tell the pedestrian anything about having permission to cross. But in another scenario, the light could produce a series of flashing patterns, a sort of Morse code, that would tell the pedestrian it was okay to cross. Hardly an intuitive solution, it would require the pedestrian to know the meaning of the messages created by the blinking lights.

Another possible solution is to dispense with lights and word messages, and instead use icons which could be animated to march across an AV’s windshield. For example, the permission for the pedestrian to cross would come in the form of an animated projection of an image of a person crossing the street. But even icons don’t account for every type of pedestrian. Would an icon, or a message, accompanied by sound be a better solution? Not when the pedestrian making his or her way across the street is blind or deaf, or both.

Paul Godsmark, Chief Technology Officer of the Canadian Automated Vehicles Centre of Excellence, is quoted in Tchir’s article stating, “I think there’s value in the car telling pedestrians what it’s going to do, as long as we come up with a national standard.” It is this idea of developing a national standard that is discussed at length in the May 12th, 2018 issue of The Economist, in an article entitled “Robotic rules of the road.” In it, the writer asks if the rules of the road can be defined mathematically.

A number of companies are trying to do just that. Mobileye and Voyage, two companies developing AV software, have both published papers on the subject. Mobileye’s is entitled “Responsibility-Sensitive Safety” while Voyage’s is entitled “Open Autonomous Safety.” In both, the effort is to apply mathematical rules to vehicle activities. Both companies have proposed that these beginning efforts become the basis for an open industry standard to which other companies can contribute.
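To give a sense of what a mathematical rule of the road can look like, here is a minimal sketch of one such rule: the minimum safe following distance, based on the longitudinal-distance formula as I understand it from Mobileye’s paper. The function name, parameter names, and the numbers in the example are my own illustrative assumptions, not values taken from either company’s paper.

```python
def min_safe_following_distance(v_rear, v_front, response_time,
                                a_max_accel, b_min_brake, b_max_brake):
    """Minimum safe gap (metres) between a rear car and the car ahead.

    Worst case assumed: the front car brakes as hard as it can
    (b_max_brake) while the rear car keeps accelerating (a_max_accel)
    for response_time seconds, then brakes only gently (b_min_brake).
    Speeds are in m/s, accelerations in m/s^2. All names are hypothetical.
    """
    # Speed of the rear car at the end of its response time.
    v_rear_after = v_rear + response_time * a_max_accel

    # Distance covered by the rear car while reacting, then while braking.
    rear_travel = (v_rear * response_time
                   + 0.5 * a_max_accel * response_time ** 2
                   + v_rear_after ** 2 / (2 * b_min_brake))

    # Distance the front car needs to stop under hard braking.
    front_travel = v_front ** 2 / (2 * b_max_brake)

    # A negative result means any gap is safe, so clamp at zero.
    return max(rear_travel - front_travel, 0.0)


# Illustrative values only: both cars at 20 m/s (72 km/h), 1 s response time.
gap = min_safe_following_distance(20.0, 20.0, 1.0, 2.0, 4.0, 8.0)
print(f"Keep at least {gap:.1f} m behind the car ahead")
```

A rule like this is easy to state and check, which is exactly why it is a poor fit for the nod, the honk, or the hand gesture.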

Will even an open standard cover the cultural nuances of what a honk means to drivers in different cultures? Will it understand different driver hand gestures, both polite and impolite? And what about cars with bumper stickers or those waving, bobbing figurines that festoon the back windows of some vehicles? Or the stuff people hang on car mirrors? This is human behaviour that is far more difficult to mathematically quantify for an AV than a simple lane change or merge into traffic.

Undoubtedly, once an acceptable standard becomes common to all AV developers, the act of driving will become far safer. By how much? A recent study published by the RAND Corporation concluded that if AVs were only 10% safer than human drivers, more than a half million lives would be saved over a 30-year period.