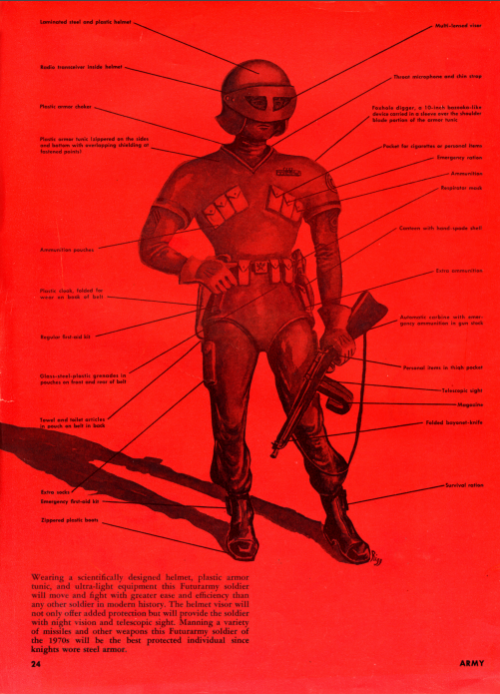

March 10, 2017 – In 1956, Lieutenant Colonel Robert B. Rigg, serving in the United States Army, wrote a paper entitled "Soldier of the Futurarmy." In it he described an army of fighting men outfitted like supermen, with every square centimeter of the "futurarmy" soldier's clothing and kit scientifically amped up for defense and offense. In the illustration, Rigg's soldier looks more like a fanciful medieval knight than a soldier of today.

Rigg argued that the futurarmy would still rely principally on humans rather than machines, and that the soldier would "live, move and fight amid amazing weapons and machines – nuclear-powered helicopters, flying tanks, flying platforms, flying artillery, missiles, drone devices and even mechanical spies." He described "basket-sized capsules" dropped into enemy territory to scout out the enemy, make weather observations, locate the disposition of troops, and more. These capsules would transcribe their observations into Morse code and transmit the gathered intelligence at 17 words per minute. Rigg expected his future soldier to be fighting by 1974. In reality, the American military that fought the Vietnam War had adopted very little of what Rigg envisioned. And, of course, he did not foresee the development of autonomous robots capable of operating on a battlefield.

Enter Mad Scientists

Today the U.S. Army's Training and Doctrine Command (TRADOC) has launched the Mad Scientist Initiative, an alliance of the military, academia, and business focused on military operational innovation. Mad Scientist looks at a range of technologies, including:

- advancements in human and cognitive sciences

- artificial intelligence, autonomous and semi-autonomous weapon systems and operations

- battlefield communications and information processing technology

- implementing lightweight materials

- battlefield power generation

- precision weapon systems for surface-to-air, air-to-surface and surface-to-surface fire

- vertical takeoff and landing technology and unmanned aerial systems

At a recent Mad Scientist conference, the Army reviewed the changing landscape of military confrontation and concluded that it needs to invest in quantum computing, tactical Internet of Things (IoT) systems and devices, and machine-to-machine learning to gain intelligence and battlefield advantages over enemy combatants in diverse scenarios.

According to those attending Mad Scientist, future armies will need to take into account the rise of megacities as the likely setting of future wars. These cities would be densely populated and would act as havens and support bases for insurgents, criminal organizations, and terrorists. Fighting in such difficult environments would require better situational awareness and new technologies for maneuvering above, below, and within urban areas.

Cyber intrusions would be countered with advanced computing power, hence the push to develop quantum computers capable of withstanding the most sophisticated hacking efforts.

But like Rigg, who saw the soldier as the centerpiece of military operations, Mad Scientist puts soldiers' skills first as the key asset in achieving dominance over adversaries.

What the Army of the Future Will Look Like Based on What We See Today

On the battlefields of 2030 and beyond, expect to see an army with very few human personnel, surrounded by a panoply of technology that extends the capability of each soldier deployed in the field. Reconnaissance on the ground and in the air, carried out by robots and drones, will provide unprecedented situational awareness. Delivery drones will keep soldiers resupplied. Drones above the battlefield will not only provide real-time intelligence but also be capable of firing on enemy combatants as directed by soldiers. Drones and ground robots will talk to each other and to the humans they are designed to support. The key to all of this will be software applications and communications technology capable of withstanding enemy interference. The U.S. Army is hell-bent on developing a robotics operating system to support this vision.

Robert Sadowski, chief roboticist at the Tank Automotive Research, Development and Engineering Center (TARDEC), describes the need to build a comprehensive app store for robots and autonomous systems. He still sees the soldier as central to the plan. Describing robotic capability, he states, "We're always going to have Soldiers involved in the process. You don't put treasure on the road without some sort of security…. We're not going to have unmanned Terminator robots roaming around on the battlefield."

What About the Other Side?

Will adversaries agree to limit their military operations to human-controlled weapons only? Russia and China may see things differently. Both countries are investing in robotics and in autonomous weapon and surveillance systems, preparing for a future roboticized battlefield. In a 2015 article appearing in Tech Insider, Valery Gerasimov, Chief of the Russian General Staff, is quoted as stating, "In the near future, it is possible that a complete roboticized unit will be created capable of independently conducting military operations." Russia has been working on sentry robots charged with protecting missile installations and has recently demonstrated them. China's foray into autonomous weapon systems includes tactical missiles with built-in intelligence capable of seeking out targets during combat operations. Will Chinese efforts go further? That remains a big unknown.

Should Autonomous Weapons Be Universally Banned?

In light of the potential threat of killer robots deployed on future battlefields, artificial intelligence researchers have mounted a campaign against them. In 2015, more than a thousand researchers issued a letter calling for a ban on weapon systems that use AI to make killing decisions.

In the letter, the signatories stated, "Just as most chemists and biologists have no interest in building chemical or biological weapons, most AI researchers have no interest in building AI weapons and do not want others to tarnish their field by doing so…. Starting a military AI arms race is a bad idea and should be prevented by a ban on offensive autonomous weapons beyond meaningful human control." Among those signing the letter are Stephen Hawking, Elon Musk, Steve Wozniak, Noam Chomsky, and Peter Diamandis. Since the letter's publication, 3,105 AI and robotics researchers and more than 17,000 others have added their names. But according to an article in The Verge, only five countries supported such a ban at a 2014 United Nations-sponsored conference on lethal autonomous weapons.

The arguments offered for not banning the technology are as follows:

- it is believed that robotic weapons will mean far fewer human casualties

- only robots, not soldiers, are put at risk on the battlefield

- robots can take on greater risk than humans

- robots are more accurate than humans

The countries that supported a ban at that 2014 conference were Cuba, Ecuador, Egypt, Pakistan, and the Vatican. Since then, the number of countries supporting a ban has grown to 19.