A few years ago I bought a tablet that used a special pen and ordinary writing paper. I would place the paper in the holder on the tablet surface and take notes, draw pictures, and sketch pie charts and graphs. When I finished a page, I would insert a fresh sheet of paper and press the tablet button to create a new page. When I plugged the tablet into my computer, it would save my notations as a file, then translate my handwriting into text and my pictures into finished graphics. It learned my handwriting style and after a while got pretty good at it. I eventually abandoned this technology in favour of the traditional three-hole-punched, wire-bound notebook because it required too many steps to actually enhance my productivity. I found I could type my handwritten notes faster, and the handwriting recognition often proved less than accurate.

But using this technology got me thinking about the way we use technology to communicate, and about people with disabilities. In my last blog post on communications I talked about the world of the blind and the science and technology that give visually impaired people a means to sense the visual world around them. In this post I look at the world of the hearing impaired and the technologies, both existing and under development, that make it possible for those who cannot hear to communicate.

Breaking Down Barriers Between the Deaf and the Rest of Us

If we counted only the people in the world who are deaf or mute, how large would that population be? Would it surprise you to find out it exceeds the total population of Canada? In fact, the number is estimated at 40 million. Many who are deaf have learned sign language. Some speak aloud but cannot hear themselves. But many who are deaf find it extremely challenging to communicate with those of us who are not.

In past blog posts focused on biomedical technologies I have described cochlear implants and other devices that help create the ability to hear for those who were born with hearing loss or who acquired it later.

Today we have technologies such as video phones that allow deaf people to sign to each other through the traditional telephone network. But how can deaf people be heard by those who can hear? Is there technology that can break down the barriers that divide the hearing from the 40 million who cannot? The answer, of course, is yes, and you are about to learn about some of these interesting 21st-century technological advances.

Communication through Texting

UbiDuo is a portable, wireless communications device for the deaf that facilitates conversation through computer keyboards and display screens. The technology uses telephone networks and its own local wireless network to accommodate up to four users in a chat conversation.

But UbiDuo doesn’t speak aloud for people who are deaf. For that, deaf people have traditionally relied on human interpreters who know sign language and speak for them. Screen readers can vocalize words typed on a screen when integrated into conventional chat applications, but many screen readers do not work with chat applications because of restrictions imposed by the tools used to build those applications. And every extra step required to turn text back into speech creates a delay that breaks the flow of conversation, just as my electronic handwriting note pad did. So a device like UbiDuo may help facilitate communication, but truly spontaneous conversation between deaf and hearing people is not possible with this type of technology. That is one reason why a number of research teams have set out to create interfaces that let deaf people communicate through signing.

Making It Possible for Everyone Who Signs to Talk to Everyone Else

What if we could use modern sensor technology to capture sign language and turn it into spoken words? That is what a team of researchers at a university in Ukraine has been exploring. They call their prototype Enable Talk.

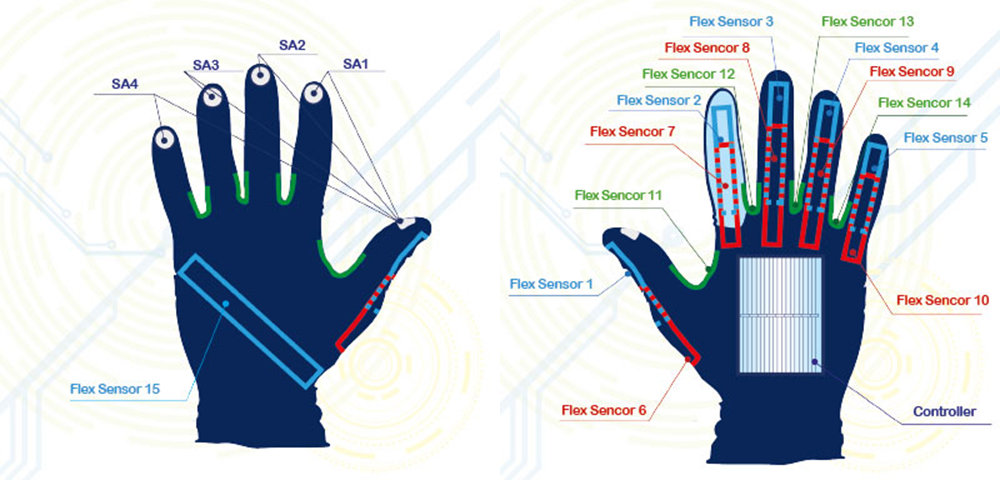

Enable Talk uses specially designed gloves (pictured above) to convert signing into spoken words. Each glove is lined with a dozen or more flex and touch sensors, a compass, a gyroscope, and an altimeter. Together these sensors detect motion, direction and position in space, and they relay the information gathered to a controller on the back of each glove. The controller translates the signs and, through its Bluetooth interface, transmits the output to a smartphone, where it becomes speech. The gloves are powered by lithium-ion battery packs and built-in photovoltaics. Like spoken languages, sign languages vary from country to country, so the developers have given the gloves the means to learn and store new signs, incorporating them into a language library.
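The learn-and-store idea described above can be illustrated with a simple nearest-neighbour template library. This is a minimal sketch under my own assumptions, not Enable Talk's actual software: each sign is reduced to a single vector of normalized sensor readings (for example, one flex value per finger), and `SignLibrary`, `learn`, `recognize` and the `max_distance` threshold are hypothetical names chosen for illustration.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class SignLibrary:
    """Hypothetical sign library: maps each word to stored sensor-reading templates."""
    templates: dict[str, list[list[float]]] = field(default_factory=dict)

    def learn(self, word: str, reading: list[float]) -> None:
        # Store a new example reading for this word (assumed: one sensor vector per sign).
        self.templates.setdefault(word, []).append(reading)

    def recognize(self, reading: list[float], max_distance: float = 0.3) -> str | None:
        # Find the stored template closest to the reading (Euclidean distance).
        best_word, best_dist = None, math.inf
        for word, examples in self.templates.items():
            for example in examples:
                dist = math.dist(example, reading)
                if dist < best_dist:
                    best_word, best_dist = word, dist
        # Treat anything too far from every template as an unknown sign.
        return best_word if best_dist <= max_distance else None


lib = SignLibrary()
lib.learn("hello", [0.1, 0.1, 0.1, 0.1, 0.1])
lib.learn("yes", [0.9, 0.9, 0.9, 0.9, 0.8])
print(lib.recognize([0.15, 0.1, 0.12, 0.1, 0.1]))
```

A reading that matches nothing returns `None`; calling `learn` with a new word adds it to the library, which mirrors, in a very simplified way, how a glove could extend its vocabulary for a different sign language.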

Enable Talk is not the only technology being developed that uses gloves to translate sign language into written and spoken words. A YouTube video from a TED conference demonstrates a glove that uses fiber optics and light sensors to capture signing. The technology is being developed by a team at the University of Montreal and Polytechnique Montreal, in Canada. A user wearing these gloves signs, and a computer or mobile device translates the motions into words and sounds. The team is building the technology to recognize signs from multiple sign languages.

Sign-to-Sign Through the Web

Using sign language on the Internet involves recording video of the signer, which removes anonymity from any contribution to a wiki, blog, comment thread or chat. What if it were possible to build a set of applications for a sign-to-sign web interface? That is precisely what a group of European universities is attempting.

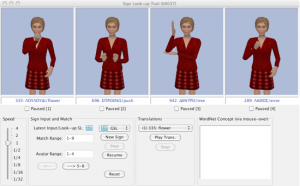

Dicta-Sign is a collaborative project involving universities in Greece, Germany, the United Kingdom and France. The partners are working on a three-year schedule to deliver a final product by the end of this year, 2012. The project’s goal is to make online sign language communication ubiquitous. Signers using Dicta-Sign can converse in sign language and create editable web content. The content is presented by an anonymous signing avatar. That means anyone who knows sign language can edit or expand on any signed contribution to web content, with the avatar presenting the result for other signers to read and respond to with their own thoughts.

Dicta-Sign adapts technologies already common on the web. It is accessible from any desktop, laptop, netbook, tablet or smartphone. The project currently has three application prototypes:

- Search-by-example – a tool trained to identify and match signs to an existing library

- Look-up – a tool able to display signs in several sign languages

- Sign Wiki – a collaborative online environment for creating sign language documents
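The search-by-example prototype matches a sign against an existing library. I don’t know how Dicta-Sign implements this; a common, general-purpose technique for comparing motions performed at different speeds is dynamic time warping (DTW), sketched here under stated assumptions. Each sign is assumed to be already reduced to a sequence of numeric feature frames (for example, hand positions per video frame), and `dtw_distance`, `search_by_example` and the gloss-to-sequence library format are hypothetical.

```python
from __future__ import annotations

import math


def dtw_distance(a: list[list[float]], b: list[list[float]]) -> float:
    """Dynamic time warping distance between two sequences of feature frames."""
    n, m = len(a), len(b)
    # cost[i][j] is the cheapest cumulative cost of aligning a[:i] with b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: match both frames, repeat a frame of b, repeat a frame of a.
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


def search_by_example(query: list[list[float]], library: dict[str, list[list[float]]]) -> list[str]:
    """Rank library glosses from best to worst match for the query sign."""
    return sorted(library, key=lambda gloss: dtw_distance(query, library[gloss]))


library = {
    "wave": [[0.0], [1.0], [0.0]],
    "raise": [[0.0], [0.5], [1.0]],
}
slow_wave = [[0.0], [0.0], [1.0], [1.0], [0.0]]  # the same motion, signed more slowly
print(search_by_example(slow_wave, library))
```

Because DTW lets one sequence "stretch" against the other, a sign performed slowly still matches its faster library entry exactly, which is why it is a reasonable baseline for example-based lookup.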

We live in a remarkable era, one in which the technology at hand can empower all members of our global community to interact, breaking down barriers as never before and building bridges to people who have often been left out of the mainstream.

As we continue to explore the evolution of communications in the 21st century, in Part 5 I will look at the technologies that allow us to communicate directly with people who are locked in and were previously unable to communicate because of catastrophic illness, accident or disability.

Please join the conversation by commenting, contributing questions, and providing ideas for future blog entries. And thanks for coming by for a read.