In a recent e-mail, Peter Diamandis of the XPrize and Singularity University writes, “Artificial Intelligence (AI) is so fundamental that it’s likely to have a binary outcome.” He states there will be two kinds of companies at the end of this decade: those fully utilizing AI, and those that are out of business. He goes on to list reasons for concern and reasons to relax.

Concerns About AI

- Why in the world did the OpenAI Board fire the company’s CEO, Sam Altman? This $90 billion question will no doubt become a pay-per-view movie within the next three years. Did early evidence of Artificial General Intelligence (AGI) spook the board? Whatever the reason, the fact that the world’s most powerful AI company experienced such turbulence is a concern. It’s a reminder that when dealing with AI, we have to pay attention not just to the technology, but also to the people developing and controlling it.

- Eight years ago, Cambridge Analytica, armed with a sizeable budget, interfered in the 2016 U.S. presidential election. If a company could have such influence back in 2016, when AI was far less developed, what should we expect in the upcoming 2024 election, when a high school student using today’s free AI tools could cause significant havoc?

- Could AI leave an entire generation of college graduates looking for work? Will the market for skilled labour disappear? On top of the trauma and disruption of the last 3+ years from COVID-19, could advanced economies be facing an employment crisis? Can we retrain or upskill the labour force in time? Is it time to introduce universal basic income plans?

- We have every reason to be concerned about the erosion of truth and trust as dystopian uses of AI give bad actors and malicious individuals the tools to generate misinformation, from deepfakes to fake news. What will this do to the social fabric? How must we change what we teach, how we do research, and how we evaluate what gets published when AI can be used to manipulate and alter facts? Can we develop algorithms that allow us to discern fact from fiction and truth from disinformation?

Reasons to Relax

Meta’s Chief AI Scientist is Yann LeCun. He is a graduate of the Sorbonne and a professor at NYU. He pioneered convolutional neural networks capable of recognizing handwriting and is a recipient of the Turing Award.

LeCun argues that “intelligence doesn’t necessarily equate to a desire for domination.” Is using superior intellect to dominate others the only way humans act?

LeCun points out that not all humans use their intelligence this way; many do the opposite. He states, “The most intellectually gifted among us aren’t necessarily those seeking power. This phenomenon can be observed in various arenas, from international politics to local communities. It’s believed that those who aren’t as intellectually endowed might feel a greater need to influence others, perhaps as a compensatory mechanism. In contrast, those with higher intelligence can often navigate life relying on their skills and knowledge.” Reflecting on his own experience leading a research laboratory, LeCun says, “The most rewarding hires were those who brought more intellect to the table than myself. Working with individuals who display superior intellect can be enriching and elevating.”

Similarly, our future interactions with AGI assistants, envisioned to be more intelligent than us, will likely enhance our capabilities rather than diminish them. AGI won’t overthrow us; rather, it will augment us. The relationship is analogous to that between a mentor and an apprentice, with the AGI playing the role of a supportive and enlightening guide.

The notion that AGI will desire dominance stems from our understanding of social animals, among which hierarchical structures prevail. Humans exhibit this characteristic. But a better species to emulate, says LeCun, would be the orangutan.

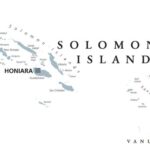

Orangutans are nearly as intelligent as we are. They can use our tools and even operate machinery, as the image above shows. Yet because they are largely solitary rather than social, they show no notable desire to dominate.

So we can design AGI to be like the orangutan, with no inherent ambition to dominate. LeCun says, “It is we, the humans, who will define the objectives for AI systems. These intelligent systems will then create subgoals to achieve these primary goals. However, the method to ensure that AI aligns its subgoals with our intended outcomes is a technical challenge yet to be fully resolved.” This area of AI research needs further development to ensure that none of the concerns described above become the norm.

Imagine an AI that is a more interactive, knowledgeable version of Wikipedia: a platform that not only stores information but also infers, learns, and assists. This AI needs to be open and accessible to all, not controlled by a few private entities. A concern LeCun raises is that a small number of companies could end up controlling super-intelligent AI systems, and “They could potentially influence public opinion, culture, and more, leading to an imbalance of power and control.” That’s why, from his pulpit at Meta, LeCun advocates for AI systems to be open source, much the way the Internet is today. This would allow for global contributions and oversight and ensure that AI becomes the repository of our collective knowledge, shaped by inputs that span the globe.