In an article I wrote last year, I described how artificial intelligence (AI) may help us to communicate with alien visitors from space. With AI innovation in hyperdrive these days, it appears that our first attempts to unlock interspecies communication here on Earth are well under way, with researchers and wild dolphins taking the first steps toward conversation.

The latest AI to make headlines is DolphinGemma, a large language model (LLM) developed by Google to help researchers decode how wild dolphins communicate and, eventually, converse with them. DolphinGemma is a collaborative creation of Google DeepMind, the Wild Dolphin Project, and researchers at the Georgia Institute of Technology.

The Wild Dolphin Project (WDP) has been focused on two communities of dolphins in the Bahamas since 1985. Denise Herzing, the founder and research director at the WDP, has been gathering information on dolphin behaviour, social structure, habitat, and communication for roughly four decades. Her team has built a large database of carefully recorded vocalizations from four generations of Bahamian Atlantic spotted dolphins.

The two dolphin communities use whistles, squawks and buzzes to communicate not only within their groups but also outside of them, including in interactions with other dolphin species. Researchers have linked some of these sounds to specific objects or behaviours, much as words stand for objects and actions in human language. Signature whistles, for example, identify individual dolphins.

Dolphin communities are called pods. They exhibit complex social behaviours. The young learn from mothers, siblings, peers and elders. Juveniles babysit younger members of the pod. As dolphins mature, their social roles and responsibilities change.

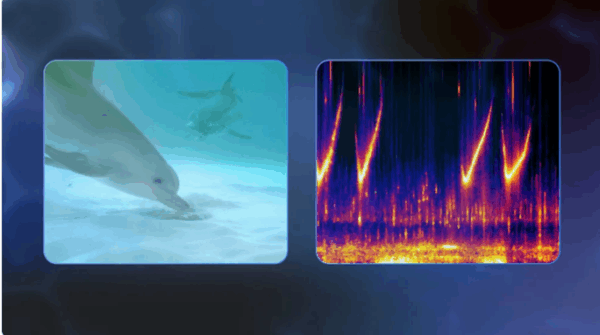

Google DeepMind trained the model on WDP's recordings of dolphin vocalizations, which are labelled with the identities of individual dolphins and with the behaviours and objects associated with each sound. The result is DolphinGemma, an AI model that uses Google-developed audio technology to decode dolphin communication. One of those technologies, the SoundStream tokenizer, converts raw audio into discrete tokens, enabling efficient audio compression, analysis, and generation.

How does it work? SoundStream has three components: an encoder, a quantizer, and a decoder. The encoder transforms input audio into a compressed representation. The quantizer then maps that representation onto discrete tokens. The decoder reconstructs audio from these tokens. DolphinGemma is an audio-in, audio-out model: it takes a sequence of dolphin sounds and predicts the sounds most likely to come next. This is similar to the way LLMs predict the next words in a sentence.
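To make the encoder, quantizer, decoder and next-sound prediction steps more concrete, here is a toy sketch in Python. It is not SoundStream or DolphinGemma: the real systems use learned neural networks, while this version assumes a hand-made five-entry codebook, a simple loudness feature per frame, and a table of token-pair counts standing in for the language model. Every name in it (`CODEBOOK`, `encode`, `quantize`, `decode`, `train_bigram`, `predict_next`) is hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical codebook: each entry stands for one discrete "sound unit".
CODEBOOK = [0.0, 0.25, 0.5, 0.75, 1.0]


def encode(samples, frame_size=4):
    """Encoder: compress each frame of samples into one feature
    (here, the mean absolute amplitude of the frame)."""
    frames = [samples[i:i + frame_size] for i in range(0, len(samples), frame_size)]
    return [sum(abs(s) for s in frame) / len(frame) for frame in frames]


def quantize(features):
    """Quantizer: replace each feature with the index of the nearest codebook entry."""
    return [
        min(range(len(CODEBOOK)), key=lambda k: abs(CODEBOOK[k] - x))
        for x in features
    ]


def decode(tokens):
    """Decoder: rebuild an approximate feature for each token by codebook lookup."""
    return [CODEBOOK[t] for t in tokens]


def train_bigram(token_sequences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for seq in token_sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the token seen most often after `token`, or None if it was never seen."""
    if token not in counts:
        return None
    return counts[token].most_common(1)[0][0]


# Made-up "recording": three frames of quiet, medium and loud sound.
samples = [0.1, -0.1, 0.1, -0.1, 0.5, -0.5, 0.5, -0.5, 1.0, -1.0, 1.0, -1.0]
tokens = quantize(encode(samples))
model = train_bigram([[0, 2, 4], [0, 2, 4], [0, 2, 1]])
print(tokens, decode(tokens), predict_next(model, 2))
```

The point of the sketch is the shape of the pipeline: continuous audio becomes a short list of integers, and once sounds are integers, predicting the next one becomes the same kind of problem as predicting the next word.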

DolphinGemma can be run on a Google Pixel smartphone. WDP is deploying the technology in its field research to confirm the AI’s predictive effectiveness in uncovering structural meaning within dolphin language.

But how do we talk back? This is where the Georgia Institute of Technology’s research is paying dividends. CHAT, an acronym for Cetacean Hearing Augmentation Telemetry, is an underwater computer designed by Georgia Tech researchers to establish two-way communication between humans and dolphins using a shared vocabulary. CHAT recognizes dolphin whistles in real time and tells the diver which item in that vocabulary it has heard. A diver outfitted with CHAT can then provide a suitable response.
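The idea of matching a whistle against a shared vocabulary can be sketched in a few lines of Python. This is only an illustration and not how CHAT is actually implemented: it assumes each whistle has already been reduced to a short list of frequencies in kilohertz, and the vocabulary entries, the contour values, the `max_distance` threshold and the function names are all invented for the example.

```python
# Hypothetical shared vocabulary: object label -> whistle contour in kHz.
VOCABULARY = {
    "sargassum": [8.0, 10.0, 12.0, 10.0],
    "scarf": [12.0, 9.0, 6.0, 9.0],
}


def contour_distance(a, b):
    """Mean absolute difference between two equal-length frequency contours."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def recognize(contour, vocabulary=VOCABULARY, max_distance=1.0):
    """Return the label of the closest vocabulary whistle,
    or None if no template is within max_distance."""
    best_label, best_dist = None, float("inf")
    for label, template in vocabulary.items():
        if len(template) != len(contour):
            continue  # this toy version only compares contours of equal length
        dist = contour_distance(contour, template)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label if best_dist <= max_distance else None


heard = [8.2, 9.9, 11.8, 10.1]  # made-up whistle, close to the "sargassum" template
print(recognize(heard))
```

The threshold matters as much as the matching: returning `None` for an unfamiliar whistle is what keeps the system from reporting a vocabulary item that the dolphin never produced.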

DolphinGemma is still at a foundational stage with a limited vocabulary of a few words. But the work with the dolphins will enlarge this vocabulary over time. Today, we understand when a dolphin identifies sargassum, seagrass or even scarves worn by divers. In the future, we will understand and talk much more.

For interspecies communication, DolphinGemma is a first step. In future, AI could help us to decode bird song, insect communication and other species’ sounds. Using AI, we may learn whale dialects well enough to warn whales of approaching ships and help them avoid collisions. The technology could allow us to identify distress calls, or other changes in vocal patterns that point to specific environmental stressors. In talking to the animals, we humans may be able to intervene in real time and take corrective action to mitigate the threats from nature and ourselves.

We know that animal communication is not limited to sound, so we still have a long way to go to be Dr. Dolittle. For that capability, we will need AIs that not only pick up on sound but also interpret body language using data collected by sensors in real time. That’s an entirely different technical leap.

With DolphinGemma, however, we are at least taking a first step toward conversing with another inhabitant of our planet. What follows may help us to understand the sentient world that surrounds us and any future visitors that choose to call on us here on Earth.