December 28, 2013 – I’ve been reluctant to write about technology and war on this blog; only 16 of my more than 700 posts have looked at the subject. But this is an elephant in the room of technological innovation that cannot be ignored. So let’s look at one 21st-century innovation that is dramatically changing the face of death and war: the rise of killer robots.

Why is the military falling in love with robots?

- You don’t have to feed or clothe them.

- None of your personnel die when you fight a battle.

- Robots work 24/7, with no sleeping on the job.

- Robots don’t question orders.

- Robots don’t feel pain when they die.

When you look at all these advantages, it is scary to think how much easier it becomes to go to war. But what happens when you add artificial intelligence (AI) to the equation? Can robots be designed to decide for themselves whether or not to kill? Is this where we are heading as we develop ever more sophisticated intelligent machines?

Autonomous action by a military robot may serve us well in search-and-rescue scenarios, but would it be a war crime to give an AI-equipped robot the discretion to make lethal decisions on the battlefield?

These are among the many questions that those developing AI and autonomous machines are asking. And when you consider that DARPA, the U.S. Defense Advanced Research Projects Agency, is among the major investors in AI and robotics, it seems that cognitive awareness and independent decision-making are capabilities the United States is seeking for its 21st-century forces.

Imagine an autonomous, crewless tank that identifies all friendlies by their electronic RFID tags and then, based on its programming, proceeds to destroy all other human life within its range, assuming it to be the enemy.

We are not there yet, but we are not far from that future. That should be a worrying thought. Autonomous robots should not be designed with a kill function.

Today’s robotic killing machines, the drones we read about that target individuals and groups from the air, still have human handlers making the decisions. These humans regularly make mistakes: they mistake a wedding party in Yemen for an Al-Qaeda terrorist cell, or bomb peaceful civilians rather than terrorists because of faulty intelligence. Are the humans who make these mistakes held accountable? Today, it seems, they are not. No soldier sitting at a video display terminal with a joystick gets hauled before the International Criminal Court for killing defenseless people thousands of kilometers away. If we can’t hold the handlers accountable, how would we deal with autonomous AI robots whose killing decisions turn out to be wrong?

Finally, electronic intelligence can be hacked. We see the hacking of websites and industrial computers in the news from time to time. Iranian uranium enrichment centrifuges were destroyed by the introduction of a computer virus. What would happen if a virus or coding error caused a killer robot to turn on its own side? What would happen if a design error in production led to a battlefield catastrophe? Who would be accountable then?

The U.S. Department of Defense currently has a policy on autonomous and semi-autonomous weapons in place (Directive 3000.09, issued in November 2012). Among its many provisions are the following principles:

- Humans will have control over the deployment of these weapons for battlefield use.

- Weapons will be designed with anti-tamper mechanisms so that, should a failure occur, no unauthorized party can assume control.

- Weapons will be designed with traceable feedback systems to ensure accountability.

- Operators responsible for these weapons will follow the laws and conventions that govern war including all applicable treaties and rules of engagement.

- Weapons used to apply lethal force will not have the autonomy to target any individuals beyond those selected by their human handlers, even if communications are severed.

- Weapons may defend human-occupied installations and machines by intercepting incoming hostile weapons, but may not select humans as targets.

- Weapons may be used to apply non-lethal force against enemy combatants while under the direction of human handlers.

- Exceptions to any of the above will require Under Secretary of Defense approval.

- Sales of these weapons will be approved through existing technology security and foreign disclosure processes.

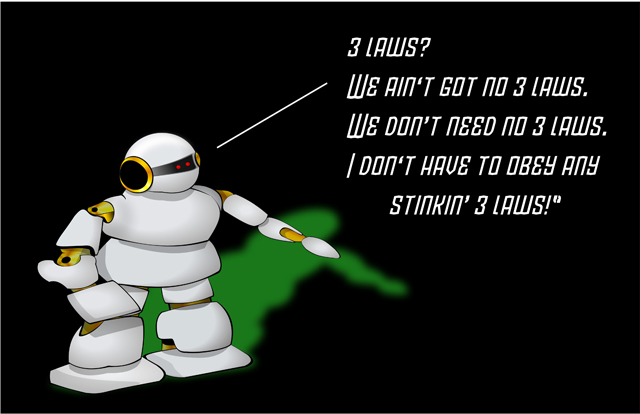

I don’t know about you, but I take little comfort in these provisions. At least Asimov’s three laws of robotics and the five principles drawn up by AI designers in the United Kingdom place some moral constraints on robot behaviour. I am more inclined to agree with UN expert Christof Heyns, who in May of this year called for a moratorium on the production, testing and development of armed robots, stating that “war without reflection is mechanical slaughter,” and that “a decision to allow machines to be deployed to kill human beings worldwide, whatever weapons they use, deserves a collective pause.”