Can an artificial intelligence application predict when and where a medical emergency is about to happen? In Israel after October 7, 2023, rosters of emergency medical personnel were depleted when the Israel Defense Forces called up reservists to fight Hamas in the Gaza Strip.

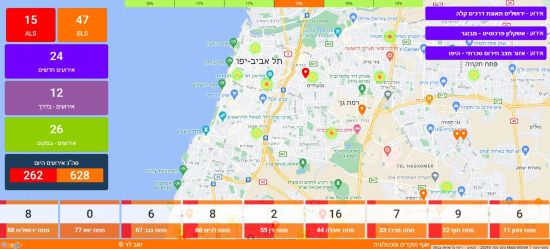

United Hatzalah is a volunteer emergency medical response organization that works with Magen David Adom, Israel’s equivalent of the Red Cross. Facing a loss of staff, United Hatzalah introduced a new AI response system developed with help from Israel’s intelligence network.

The AI was fed 18 years of historical emergency data from specific cities across the country. In a three-month pilot project, it predicted the time and location of emergencies with 85% accuracy. United Hatzalah claims the AI reduced response times in cities to 90 seconds.

The AI system used historical data, weather conditions, terrain, time of day, and other factors in making its predictions. It identified high-risk areas and sent out alerts to reposition emergency responders in anticipation of an incident.

For those who remember Steven Spielberg’s 2002 movie Minority Report, this emergency response predictor feels like science fiction turned into reality. In that movie, a police unit called Precrime relied on psychic “precogs” to predict where and when future crimes would happen and who would commit them, and officers intervened before the act. Of course, if that system had been right only 85% of the time, many people would have been arrested for crimes they would never have committed.

The AI uses machine learning algorithms to identify statistical patterns and trends in the data it is fed. It starts with the data, examines the underlying patterns and trends, calculates probabilities based on those patterns, and then makes predictions. It keeps learning as new data arrives, so over time it can become more accurate.
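United Hatzalah has not published how its model works, so the following is only a minimal sketch of the data → patterns → probabilities → predictions loop described above. It assumes each past incident is recorded as a hypothetical `(area, hour)` pair and uses plain frequency counting rather than whatever algorithm the real system uses. The class name `IncidentRateModel` and the area names are invented for illustration. It also ignores the weather, terrain and other factors the real system reportedly weighs.

```python
from collections import Counter, defaultdict


class IncidentRateModel:
    """Hypothetical frequency model: estimates where an emergency is most
    likely at a given hour, based only on counts of past incidents."""

    def __init__(self):
        # counts[hour][area] -> number of past incidents in that area at that hour
        self.counts = defaultdict(Counter)

    def learn(self, incidents):
        """Add (area, hour) records. Calling this again with new data
        updates the counts, which is the 'keeps learning' step."""
        for area, hour in incidents:
            self.counts[hour][area] += 1

    def probabilities(self, hour):
        """Share of this hour's past incidents that occurred in each area."""
        total = sum(self.counts[hour].values())
        if total == 0:
            return {}
        return {area: n / total for area, n in self.counts[hour].items()}

    def high_risk_areas(self, hour, top_n=2):
        """Areas ranked by estimated probability, highest first; these are
        where responders would be repositioned in anticipation."""
        probs = self.probabilities(hour)
        return sorted(probs, key=probs.get, reverse=True)[:top_n]


if __name__ == "__main__":
    model = IncidentRateModel()
    model.learn([("North", 8), ("North", 8), ("Center", 8), ("South", 22)])
    print(model.high_risk_areas(8))  # ['North', 'Center']

    # New data shifts the prediction.
    model.learn([("Center", 8), ("Center", 8)])
    print(model.high_risk_areas(8))  # ['Center', 'North']
```

A production system would use far richer features and a trained statistical model. Counting is used here only because it makes each step of the loop visible.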

Is 85% accuracy a significant leap over a human operator staffing a central dispatch centre who can see information as it is reported in real time? The 85% threshold is a common industry benchmark, and it appears often in business literature. Customer service satisfaction statistics use it as the threshold for top-tier performance. In warehouse operations, however, 85%, whether measuring efficiency or occupancy, is considered less than satisfactory.

There is more to say about 85% as a measure.

Consider voice recognition systems. My first encounter with voice recognition, a form of AI, came in 1989, when I began using DragonDictate and was asked to develop applications that worked with it. At the time, DragonDictate was the state of the art in voice-to-text dictation. It worked with word processors and other computer software applications.

My experience with DragonDictate taught me something about 85% as a measure. At 85% accuracy, the system misses 3 of every 20 words. When you first train voice recognition software, it takes hours to reach the 85% accuracy threshold. Even once you reach it, dictated words may still be misheard or come out wrong. Imagine three wrong words in a 20-word sentence. That is 85% accuracy, and one of those errors could be a dropped negative or a mistaken term that turns the sentence into one the author never intended.
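The arithmetic behind “3 in 20” can be checked in a few lines. The first function simply applies the error rate to a word count. The second estimates the chance that a sentence contains at least one error. It rests on a loudly stated simplifying assumption: that each word is recognized independently, which real recognizers do not strictly satisfy. Both function names are hypothetical, written only for this illustration.

```python
def expected_errors(word_count: int, accuracy_pct: int) -> float:
    """Expected number of misrecognized words at a per-word accuracy (percent)."""
    return word_count * (100 - accuracy_pct) / 100


def chance_of_any_error(word_count: int, accuracy_pct: int) -> float:
    """Probability that at least one word is wrong.
    ASSUMPTION: each word is recognized independently (real errors cluster)."""
    return 1 - (accuracy_pct / 100) ** word_count


if __name__ == "__main__":
    # The example above: a 20-word sentence at 85% accuracy.
    print(expected_errors(20, 85))               # 3.0
    print(f"{chance_of_any_error(20, 85):.0%}")  # 96%
```

Under that independence assumption, an 85%-accurate recognizer would get a 20-word sentence entirely right only about 4% of the time.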

Another way to look at 85% accuracy as a baseline is to ask whether this is the best the AI will ever do. Can it deliver 85% or better reliability repeatedly? Being right 85% of the time is not ideal when life and death are on the line.

I have few doubts that machine learning can be a good predictive tool. I know this because in 2020 I wrote about BlueDot, an AI software company born out of work done by Dr. Kamran Khan at St. Michael’s Hospital in Toronto. Khan and a team of programmers developed an AI algorithm that scans unstructured content gleaned from the web and uses it to predict the spread of disease.

What is meant by unstructured content? It includes posted news stories, online text, blogs, airline ticketing information and other sources that are not organized into databases. Many AI systems are trained on structured databases, but Khan’s software was different. The system used a natural language processor similar to the one I had been using in 1989 for voice recognition. That processor didn’t need to learn from structured data. It could read content from anywhere on the web, and as early as 2009 it predicted a global influenza pandemic, the first of the 21st century. In 2014 it predicted the spread of Ebola in West Africa, and in 2016 the spread of Zika in Brazil and then Florida. For COVID-19, Khan’s AI knew nine days before the World Health Organization that a pandemic was going to sweep the planet, starting in Wuhan and spreading to the rest of China, then Thailand, Korea, Taiwan, Japan, the Americas and Europe.

As for the United Hatzalah AI app, it would be nice to see repeatability beyond a three-month pilot project. Can the software exceed the 85% threshold? If it can, then it may prove better than any human prognosticator.

I must admit it’s a bit freaky to think that an AI may one day save a life because it anticipates a threatening situation and sends an emergency medical team to that person’s door before he or she even knows what is about to happen.