Imagine a robot companion that cleans up in the kitchen, loads the dishwasher, wipes the table and counter, makes your bed and picks up your bathroom towels. Would this appeal to you? Well, at the Robot Learning Lab in the Computer Science Department at Cornell University in Ithaca, New York, a team of faculty and students is doing more than just imagining. They are researching robotic capabilities in perception and manipulation, with the aim of building personal robots that can become common everyday helpers at home and at work.

What’s involved?

- Anticipation – teaching robots to interact with humans and anticipate our behaviours is a critical skill. A robot with this attribute would need to observe human activity and then work out possible future actions over a period of time. Because humans can be unpredictable, the robot needs not only to predict a behaviour but also to respond appropriately if a different behaviour ensues.

- Organization – teaching robots to understand how humans live within a physical space so that they can help manage daily activities. This may or may not involve a human being present. In the presence of a human, the robot needs to understand human-object relationships: where a person might sit on a couch, which items go in the fridge rather than the dishwasher, and so on.

- Detection and 3D Scene Understanding – teaching robots to recognize unstructured human activities in environments where humans and robots interact. What tasks are likely in different human environments, such as a living room, an office, a kitchen or a bedroom? In performing assistive tasks, the robot combines anticipation, object recognition, and a spatial and temporal understanding of changing scenes and human behaviours.

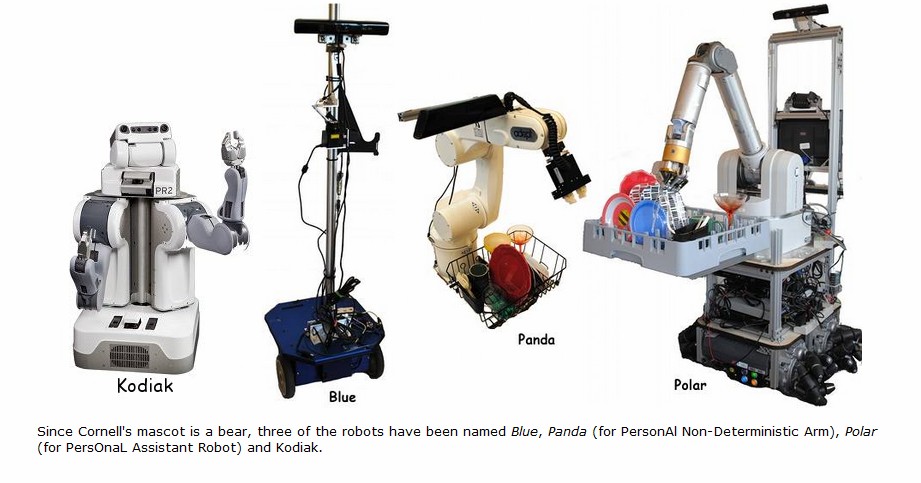

These are just three of the many projects the Lab is tackling with its current test robots, Kodiak, Blue, Panda and Polar, which are being put through their paces by a team led by Ashutosh Saxena, an Assistant Professor and Microsoft Faculty Fellow at the university. The ultimate goal is to create robots that operate autonomously in unstructured environments.

In tests so far, the robots have made correct predictions 82% of the time when looking one second into the future. At three seconds, accuracy drops to 71%, and at ten seconds to 57%. The Lab therefore still has a long way to go in designing artificial intelligence that can cope with human unpredictability. The key will be to develop intelligence built on observation over time, and the end result will be the kind of robot companions we have read about in the works of authors such as Isaac Asimov.