October 9, 2014 – As IBM finds new applications in medicine and business for Watson, its Jeopardy-winning computing technology, it is quantum physics and its application to robotics that may make artificial intelligence mobile and portable. Watson is neither. Its physical presence on the Jeopardy set appeared small, disguising the fact that the essence of the system was a room containing an array of racked computing technology.

What Quantum Physics May Have to Do with Robotic Learning

In July 2014, researchers from Universidad Complutense in Madrid, Spain, Universität Innsbruck in Innsbruck, Austria, and the Ruđer Bošković Institute in Zagreb, Croatia, published a paper in the journal Physical Review X. In it they stated that quantum physics can dramatically enhance computing and information processing, and that this enhancement can be applied to designing autonomous learning agents for robots. This form of artificial intelligence learns in a dynamically changing environment and grows its knowledge by interacting with it. That is far different from writing a fixed piece of software code. It relies on artificial neural networks to achieve continuous learning.

How can quantum physics help in the design of neural agents? Quantum computing is not limited to the “0” and “1” choices of control-gate technology, the design logic behind the computer on which I am writing this posting. Instead, a quantum state can be a blend of “0” and “1” at the same time, in varying proportions. It is this fuzziness that makes quantum-computing technology the ideal brain for robotic learning, gaining new knowledge from what is observed and tried, similar to the way we humans and many other animal species learn.
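For readers who want the notation, the standard textbook way to write a single quantum bit (qubit) is shown here. This is general quantum computing background rather than something taken from the paper, and the symbols α and β are the usual convention.

$$
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1
$$

Measuring the qubit gives “0” with probability $|\alpha|^2$ and “1” with probability $|\beta|^2$. A conventional bit is the special case where one of the two amplitudes is zero.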

The authors call their artificial intelligence model projective simulation (PS). PS uses episodic and compositional memory (ECM) to simulate future actions before real ones are taken. In this way the learning agents use quantum physics as the mechanism for internally processing all previous experiences, then reflecting on them and determining how to apply what has been learned to new circumstances. The result is artificial intelligence that outperforms control-gate technologies in executing complex tasks in a multitude of environments.
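To make the idea of learning from rewarded experience more concrete, here is a minimal sketch of a purely classical, two-layer projective simulation agent. It is not the quantum version the paper describes, and it leaves out most of the episodic memory machinery. The class name, method names and the toy task are all assumptions made for illustration. Each percept is linked to each action by a weight, an action is drawn with probability proportional to its weight, and rewarded links are strengthened.

```python
import random


class TwoLayerPSAgent:
    """Hypothetical, classical two-layer sketch of a projective simulation agent."""

    def __init__(self, percepts, actions, damping=0.0, seed=None):
        self.actions = list(actions)
        self.damping = damping  # how quickly unused weights decay back to 1
        self.rng = random.Random(seed)
        # One weight ("h-value") per percept-action link, all starting at 1.
        self.h = {p: {a: 1.0 for a in self.actions} for p in percepts}

    def hop_probabilities(self, percept):
        """Probability of hopping from a percept to each action."""
        weights = self.h[percept]
        total = sum(weights.values())
        return {a: w / total for a, w in weights.items()}

    def act(self, percept):
        """Sample one action with probability proportional to its weight."""
        weights = [self.h[percept][a] for a in self.actions]
        return self.rng.choices(self.actions, weights=weights, k=1)[0]

    def learn(self, percept, action, reward):
        """Decay every weight toward 1, then reinforce the link just used."""
        for edges in self.h.values():
            for a in edges:
                edges[a] -= self.damping * (edges[a] - 1.0)
        self.h[percept][action] += reward


def train(agent, correct_action, trials=200):
    """Toy task (an assumption): reward 1 when the agent picks the right action."""
    percepts = list(correct_action)
    for _ in range(trials):
        percept = agent.rng.choice(percepts)
        action = agent.act(percept)
        reward = 1.0 if action == correct_action[percept] else 0.0
        agent.learn(percept, action, reward)


if __name__ == "__main__":
    task = {"ball_left": "move_left", "ball_right": "move_right"}
    agent = TwoLayerPSAgent(task.keys(), ["move_left", "move_right"], seed=0)
    train(agent, task)
    print(agent.hop_probabilities("ball_left"))
```

Before training, both actions are equally likely. After a few hundred rewarded trials the agent strongly prefers the action that earned rewards for each percept, which is the trial-and-error behaviour the article describes.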

Applying Repetition to Robotic Learning

Think about how athletes in team sports learn. They master physical skill sets to ensure their bodies can withstand the stresses of the sport. Then they practice. In football they call the practice “reps,” short for repetitions. In baseball, players take batting practice, and pitchers throw in the bullpen.

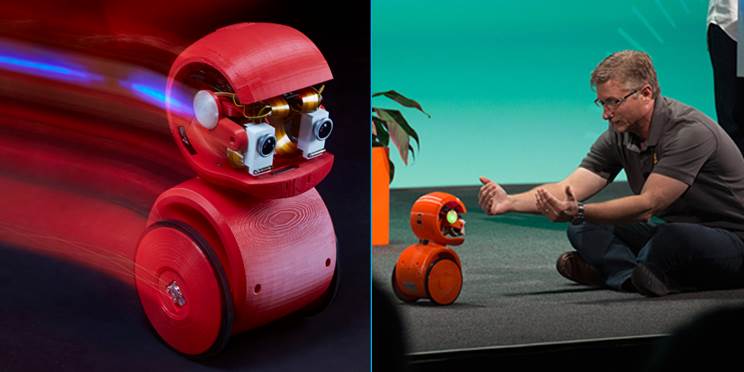

A new start-up company, Brain Corporation, in San Diego, California, is taking that model and applying it to teaching robots, rather than programming them in the conventional way by writing tons of code. The company intends to build robots that learn and adapt by providing them with software algorithms that make them teachable. A human could then teach a robot to perform one or more tasks through repeated demonstrations, with the robot following along. After a few repetitions the robot can perform the tasks on its own.

Brain Corporation isn’t out to build the kind of artificial intelligence capable of projective simulation and compositional memory. Instead its robots would be low-cost and capable of doing repetitive work. The company is already building circuit boards and using a mobile processor developed at Qualcomm to house its BrainOS. First delivery to robot designers is anticipated before the end of this year.

New Standards Set for Robots Interacting with Humans at Work

ISO is the acronym and brand for the International Organization for Standardization, responsible for publishing environmental, quality, safety and many other internationally recognized operating standards. The arrival in the workplace of robots like Baxter, capable of working side by side with humans, is creating the need for safety standards covering these interactions.

A recent conference on collaborative robotics in San Jose, California, featured a lively discussion on the rules of robot-human engagement on the factory floor and in office settings. The ISO wants to come up with a safety standard within the next year in anticipation of more Baxter-like robots joining workforces around the world. The kinds of standards being considered include the maximum amount of force a robot is allowed to exert when working beside a human. As one participant stated, “a bruise a day” would be an unacceptable standard for a human to receive from a robot partner.

The kinds of controls that robotic designers are considering to ensure human safety in the presence of mechanical coworkers include sensors that let the robot perform at a higher rate when no humans are present and have it slow down when they are in proximity.
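As a rough illustration of that kind of control, the sketch below scales a robot's speed according to the distance reported by a proximity sensor. The function name, the distances and the full stop at very close range are all assumptions chosen for the example. None of them come from an ISO standard or from any robot maker.

```python
def allowed_speed(nearest_human_m, full_speed=1.0,
                  slow_radius_m=2.0, stop_radius_m=0.5):
    """Return the speed a robot may run at, given the nearest human's distance.

    nearest_human_m is None when the sensors detect nobody nearby.
    All thresholds are hypothetical values used only for illustration.
    """
    if nearest_human_m is None or nearest_human_m >= slow_radius_m:
        return full_speed  # nobody close: work at the higher rate
    if nearest_human_m <= stop_radius_m:
        return 0.0  # assumed behaviour: halt when a person is very close
    # In between, slow down in proportion to how close the person is.
    fraction = (nearest_human_m - stop_radius_m) / (slow_radius_m - stop_radius_m)
    return full_speed * fraction


if __name__ == "__main__":
    for distance in (None, 3.0, 1.25, 0.3):
        print(distance, allowed_speed(distance))
```

A real controller would also have to account for sensor error, reaction time and the force limits discussed above, so treat this only as a picture of the basic idea.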