As computers evolve in the 21st century, we will move from the silicon-based technology that dominates today to entirely new technologies. Two that have received considerable press are quantum computing and neuromorphic chips. Before we explain these two, let’s review the 60-plus-year evolution of the computer.

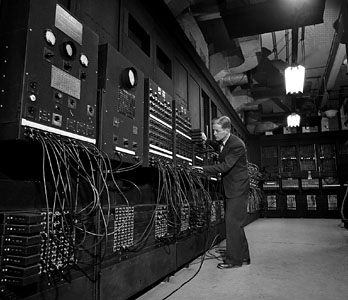

Current computers use chips made of silicon. The first computers were mechanical devices used for astronomy, clock management, astrology and navigation. Often called analog computers, they reached the height of sophistication as tools for military applications in World War II. In 1944 mechanical-electronic hybrid computers appeared. But ENIAC, completed in 1945 and publicly unveiled in 1946, is generally regarded as the first general-purpose electronic computer. ENIAC used arrays of vacuum tubes, filled a large room and generated a great deal of heat.

The dominating logic of ENIAC and its mechanical predecessors was based on control gates. A gate was either “open” (“on”) or “closed” (“off”). Eventually these two states were translated into numeric values, binary digits, or what we call bits. A bit can have one of two values, a 0 or a 1. Computer programs were constructed around this precise logic. Strings of “0”s and “1”s, in groups of 8 called bytes, became binary code, the basis of modern software programs.
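To make the idea of 8-bit groups concrete, here is a minimal Python sketch that turns a short piece of text into binary code and back again. The function names `to_bits` and `from_bits` are made up for this illustration, and the sketch assumes plain ASCII text.

```python
def to_bits(text: str) -> list[str]:
    """Return each ASCII character as a string of eight 0s and 1s."""
    return [format(byte, "08b") for byte in text.encode("ascii")]


def from_bits(groups: list[str]) -> str:
    """Rebuild the text from a list of 8-bit groups."""
    return bytes(int(group, 2) for group in groups).decode("ascii")


bits = to_bits("Hi")
print(bits)             # ['01001000', '01101001']
print(from_bits(bits))  # Hi
```

Each letter becomes one group of eight bits, which is all the machine ever stores or moves around.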

The adoption of silicon and the arrival of transistors in the mid-1950s began the miniaturization of the technology. Computers got smaller and used less energy because transistors are far smaller than vacuum tubes and need much less power. The microchip, an integrated circuit containing transistors, resistors and capacitors, showed up in the mid-1960s.

The technology we know today, the microprocessor, appeared in 1971. This was truly the dawn of the personal computer age, led by such pioneers as Gordon Moore, of Intel, who in his now often-quoted “law” predicted that the number of transistors that could be placed on a chip would double every two years. Moore’s Law has proven to be pretty accurate. The first microprocessor, the Intel 4004, contained 2,250 transistors. By the advent of the Pentium 4, in 2000, a single chip could contain 42 million transistors. Present-day advanced silicon chips contain as many as a billion transistors. But the limits of silicon chip technology are being reached. As a result, engineers are experimenting with new technologies, including hybrid chips that combine silicon with other materials.
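The arithmetic behind Moore’s Law is easy to check for yourself. The sketch below starts from the 2,250 transistors of the Intel 4004 in 1971 and doubles the count every two years. The function name `projected_transistors` is an assumption made for this illustration, and the result is a back-of-the-envelope projection, not a record of actual chips.

```python
def projected_transistors(start_count: float, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming one doubling every `doubling_years`."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Starting point taken from the article: Intel 4004, 1971, 2,250 transistors.
for year in (1981, 1991, 2001, 2011):
    count = projected_transistors(2250, 1971, year)
    print(year, f"{round(count):,}")
# 1981 72,000
# 1991 2,304,000
# 2001 73,728,000
# 2011 2,359,296,000
```

The projection reaches tens of millions of transistors after 30 years and passes a billion after 40, the same order of magnitude as the 42 million and one billion figures quoted above.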

At the same time as chip technology is evolving, our bit-based computer programming tradition is being challenged. Instead of computer information being written only in binary code, we are seeing experiments that push us closer and closer to human-like thinking. This is where quantum computing and neuromorphic chip technology enter the picture.

Whereas binary logic is built on bits with values of “0” or “1,” quantum computing represents information as quantum bits, or qubits. A qubit can represent a “0” and a “1” at the same time. This is called quantum superposition, the simultaneous representation of different informational states encompassing values of 0, 1 and all the possible values in between. Quantum computers, unlike the computers that we know and use every day, use carriers at the atomic and subatomic level, including photons and electrons, to hold and transmit qubits. So a quantum computing platform can be very tiny and its power requirements can be minuscule.
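One common way to picture a qubit is as a pair of numbers, called amplitudes, one for “0” and one for “1.” The “values in between” are the different mixes of those two amplitudes, and when the qubit is measured it still comes out as a plain 0 or 1, with a probability set by the amplitudes. The sketch below is an ordinary, classical Python simulation of that idea, not a quantum program, and the names `measurement_probabilities` and `measure` are assumptions made for this illustration.

```python
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return the chances of reading 0 and 1 from a qubit with amplitudes alpha and beta."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition collapses to 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# An equal superposition of 0 and 1.
amp = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(amp, amp)
print(round(p0, 3), round(p1, 3))  # 0.5 0.5

# Repeated simulated measurements give a mix of 0s and 1s, roughly half each.
rng = random.Random(0)
samples = [measure(amp, amp, rng) for _ in range(1000)]
print(sum(samples) / len(samples))
```

A classical bit corresponds to the two extreme cases, amplitudes (1, 0) or (0, 1), where the measurement always returns the same value.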

If you haven’t had the pleasure of studying quantum physics then the following explanation may confuse you. Let me share with you a 2006 study done at the University of Illinois using a photonic quantum computer. A research team led by physicist Paul Kwiat, a John Bardeen Professor of Electrical and Computer Engineering and Physics, presented the first demonstration of inferring information about an answer, even though the computer did not run. The researchers reported their work in the Feb. 23, 2006 issue of Nature. “It seems absolutely bizarre that counterfactual computation – using information that is counter to what must have actually happened – could find an answer without running the entire quantum computer, but the nature of quantum interrogation makes this amazing feat possible,” stated Professor Kwiat. The announcement went on to explain that quantum interrogation, sometimes called interaction-free measurement, is a technique that makes use of wave-particle duality (in this case, of photons) to search a region of space without actually entering that region of space.

Let’s move on to another new technology, neuromorphic chips. In Part 1 of this topic I described the work of Dr. Kwabena Boahen, at Stanford University. Dr. Boahen is constructing a computer organized to emulate the organized chaos of the human brain. He has created the biology-inspired hippocampus chip. Although made of silicon, it represents the foundational technology for what will be a neural supercomputer called Neurogrid. The differences between Dr. Boahen’s silicon chip and the logical digital computer chips we use today are twofold. The neuromorphic hippocampus chip uses very little energy and it is deliberately designed not to be accurate in its calculations. Our normal computer chips make an error once per trillion calculations. The neuromorphic chip makes errors once in every 10 calculations.
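To get a feel for what “one error in every 10 calculations” means, here is a toy Python sketch of an adder that deliberately gets its answer wrong about 10 percent of the time. It is only an illustration of the error rate and makes no attempt to model how Dr. Boahen’s chip actually works. The names `noisy_add` and `observed_error_rate` are made up for this example.

```python
import random


def noisy_add(a: int, b: int, error_rate: float, rng: random.Random) -> int:
    """Add two integers, but return an off-by-one answer with probability error_rate."""
    result = a + b
    if rng.random() < error_rate:
        result += rng.choice((-1, 1))
    return result


def observed_error_rate(error_rate: float, trials: int, seed: int = 0) -> float:
    """Ask the same question many times and report how often the answer is wrong."""
    rng = random.Random(seed)
    wrong = sum(noisy_add(2, 3, error_rate, rng) != 5 for _ in range(trials))
    return wrong / trials


# Hypothetical rate taken from the article: about 1 error in 10 calculations.
print(observed_error_rate(0.1, 10_000))  # roughly 0.1
```

Ask this adder the same question twice and you may get two different answers, which is exactly what a conventional chip is built never to do.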

This is very much in line with how our human brain works. Our 100 billion neurons fail to fire 30 to 90 percent of the time. Researchers cite neural noise as the major contributor to these lapses. They also see the noise as a primary contributor to human creativity. The neuromorphic chips also operate on the power equivalent of a couple of D batteries. These chips use so little power because they emulate the wiring pattern of neural circuits. The current Neurogrid contains 45,000 silicon neuromorphic chips, each using 1/10,000 to 1/500 of the power of conventional systems. And unlike conventional computers, the chips fire in waves, creating millisecond electric spikes. To create a Neurogrid that matches the total brain of a mouse, Boahen will require 64 million silicon neurons. He hopes to have this machine operational by 2011, consuming 64 watts of power to do its calculations.

What is the benefit of these neuromorphic chips? Unlike our familiar digital computer, which always gives you the same answer to the same question, the neuromorphic technology uses a fuzzier logic, looking at many different solutions, doing trial and error and finding new shortcuts, just like our human brain.

In Part 3 of this blog we will look at biological computers and the progress we are making using DNA rather than silicon as the basic construct in our pursuit of creating artificial intelligence.
